Expressions like "Data is the new oil" are already becoming a cliché on social media, but there is still a lot of truth to them. With customer centricity and hyper-personalization on the rise, data is becoming ever more valuable. However, just like crude oil, data in its raw form is of little use. It needs to be cleaned, filtered, structured, matched and enriched. Consent needs to be captured from the data owner (via GDPR-compliant opt-in requests), and the data needs to be analysed before it can deliver value in the form of new (customer) insights.

This is where most companies in the financial services sector are struggling. Collecting and storing raw data is relatively easy. Ensuring high-quality data sets (avoiding "Garbage In, Garbage Out") and converting them into insights is a whole different story. Most financial institutions face many data quality issues, i.e. most of their data is mostly right, most of the time:

- According to a Harvard Business Review study, only 3% of companies’ data meets basic quality standards.

- According to Digital Banking Report, 60% of financial institution executives indicate that the quality of data used by marketing and business intelligence areas was either unacceptable (22%) or acceptable but requiring significant additional support (38%).

- Gartner reports that poor data quality is a primary reason why 40% of all business initiatives fail to achieve their targeted benefits.

- Experian reports that 33% of customer or prospect data in financial institutions is considered to be inaccurate in some way.

- …

It should therefore come as no surprise that the importance of "Good Quality Data Sets" has increased considerably in recent years. The 1-10-100 rule applies here: if it costs R1 to capture the information, it will cost R10 to correct it and R100 to resolve the issues caused by incorrect information.
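To make the 1-10-100 rule concrete, the sketch below estimates the total cost of a batch of captured records. It is a rough illustration only. The error rate and the share of errors caught in time are hypothetical inputs. The model also assumes that correction and failure costs come on top of the capture cost, which is one reading of a rule of thumb rather than a precise cost model.

```python
def cost_1_10_100(records: int, error_rate: float, corrected_share: float,
                  capture_cost: float = 1.0) -> dict[str, float]:
    """Rough cost estimate under the 1-10-100 rule of thumb (illustrative only).

    Assumes every record costs `capture_cost` to capture, every wrong record
    that gets corrected costs 10x that on top, and every wrong record that is
    never corrected costs 100x that in downstream issues.
    """
    wrong = records * error_rate            # records captured incorrectly
    corrected = wrong * corrected_share     # errors caught and fixed in time
    uncorrected = wrong - corrected         # errors that cause downstream issues
    costs = {
        "capture": records * capture_cost,
        "correction": corrected * 10 * capture_cost,
        "failure": uncorrected * 100 * capture_cost,
    }
    costs["total"] = sum(costs.values())
    return costs


print(cost_1_10_100(10_000, error_rate=0.05, corrected_share=0.5))
```

Take hypothetical inputs of 10,000 records, a 5% error rate and half of the errors corrected. This yields R10,000 capture cost, R2,500 correction cost and R25,000 failure cost, so the errors that slip through dominate the bill. Correcting every error (`corrected_share=1.0`) would bring the total down to R15,000.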

Especially in the financial industry, data quality needs to be good or even excellent. There, correct data is the foundation of trust, and trust is a financial company's most precious asset. Data quality problems lead to wrong tactical and strategic decisions (unreliable analytics), increased operational costs (e.g. incorrect addresses increase a bank's mailing costs) and reputational damage with customers. They also block the use of new AI/ML models (or drastically increase the cost of such projects). Finally, they can result in heavy regulatory fines (for data related to KYC, AML, GDPR, MiFID, Basel II/III, Solvency II…).

As a result, new players are trying to address this. Some offer solutions that automate data capture (such as OCR and RPA solutions, automated KYC workflows…) or data governance platforms (such as Collibra, Datactics, Soda, OwlDQ, Monte Carlo, Bigeye, Ataccama…). Others offer specialized vertical solutions that improve specific data sets. Examples include the Securities Master File (TheGoldenSource, Broadridge, Markit EDM…), the Customer Master File (specialised CRM packages) and the data sets required for KYC (iComply, Docbyte, KYC Portal…) or AML (AMLtrac, Clari5, FinAMLOR…).

Apart from those solutions, which try to improve the governance and quality of data generated internally in a financial institution, there are of course also dozens of players who provide ready-to-use, high-quality external datasets for specific domains. These so-called external data providers will also grow in importance.

Data providers capture data from different sources (including manual input) and execute all the steps needed to improve data quality (like validation, transformation, matching, enrichment, filtering…). They then sell these cleaned and structured data sets to third parties. Their business model relies on economies of scale: instead of every financial institution doing this work itself, a data provider does it once and sells the result to many financial institutions.
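The quality steps named above (validation, transformation, matching, enrichment, filtering) can be sketched as a small pipeline over company records. This is a minimal sketch under several assumptions, none of which describe how any particular provider works:

- The record layout is hypothetical.
- The VAT-number check is a simplified format rule, not real-world VAT validation.
- Matching is done on exact VAT numbers only.
- The steps run in one reasonable order.

Real providers use much richer validation rules and fuzzy matching.

```python
import re
from dataclasses import dataclass, replace

# Simplified, assumed format: two letters followed by 8 to 12 digits
VAT_PATTERN = re.compile(r"^[A-Z]{2}\d{8,12}$")


@dataclass(frozen=True)
class Record:
    source: str       # where the record came from (registry, web, manual input...)
    name: str
    vat: str          # hypothetical company identifier field
    country: str = ""


def transform(r: Record) -> Record:
    """Normalize formatting: collapse whitespace, upper-case, strip VAT separators."""
    vat = re.sub(r"[\s.\-]", "", r.vat).upper()
    return replace(r, name=" ".join(r.name.split()).upper(), vat=vat)


def is_valid(r: Record) -> bool:
    """Validation: reject records without a name or with a malformed VAT number."""
    return bool(r.name) and VAT_PATTERN.match(r.vat) is not None


def match(records: list[Record]) -> list[Record]:
    """Matching: merge records with the same VAT number, keeping the first one seen."""
    merged: dict[str, Record] = {}
    for r in records:
        merged.setdefault(r.vat, r)
    return list(merged.values())


def enrich(r: Record) -> Record:
    """Enrichment: derive the country from the VAT prefix (assumed to be a country code)."""
    return replace(r, country=r.vat[:2])


def pipeline(raw: list[Record], country: str) -> list[Record]:
    cleaned = [transform(r) for r in raw]
    valid = [r for r in cleaned if is_valid(r)]
    unique = match(valid)
    enriched = [enrich(r) for r in unique]
    return [r for r in enriched if r.country == country]  # filtering step


raw = [
    Record("registry", "Acme  nv", "BE 0123.456.789"),
    Record("web", "ACME NV", "be0123456789"),   # same company, different formatting
    Record("web", "", "BE0987654321"),          # invalid: no name
    Record("manual", "Foo SA", "FR12345678901"),
    Record("manual", "Bar BV", "NL12"),         # invalid: malformed VAT number
]
print(pipeline(raw, "BE"))
```

Here the two Acme records collapse into a single Belgian record. The nameless and malformed records are dropped, and the French record is filtered out.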

Still, data providers have a reputation for being expensive, which is why many financial institutions still assemble certain data sets themselves. Internal data collection and processing seems cheaper, but it carries many hidden costs that are left out of the equation. If all costs are correctly accounted for, a data provider will almost always be cheaper. This is thanks to economies of scale and the provider's focus, which brings specialized skills and tooling that make the work more efficient.

Data providers come in all shapes and sizes, for example:

- Government(-affiliated) institutions, such as (in Belgium) the KBO/BCE, the National Bank, the Staatsblad (SPF Justice), Identifin or the Social Security databases

- BigTech companies, like Google, Facebook or LinkedIn

- Specialized niche players for customer, risk and financial data, such as Data.be, World-Check, Bureau van Dijk (Moody’s Analytics), Graydon, CrediWeb, Creditsafe, North Data, Bisnode, Infocredit Group, Probe Info, ICP…

- Specialized niche players for car (insurance) information, such as (in Belgium) the DIV (the official authority for vehicle registration), Veridass, IRES (Informex), FEBIAC (Technicar) or RSR (Datassur)

- Large international providers of financial data, such as Bloomberg, Refinitiv, Morningstar, Markit, SIX Financial Information, FactSet, EDI, MSCI, S&P, FTSE, Russell, Fitch, Moody’s…

- …

Although these data providers are likely to increase in importance, they are also facing several issues:

- Customers are demanding higher-quality data sets. As a result, data providers need to improve (or even reinvent) their current processes through data profiling and monitoring, extensive data validation, data enrichment…

- More and more data is freely available on the internet in a reasonably well-structured form. As a result, it is no longer sufficient for a data provider to offer raw data. Instead, data providers need to add value, e.g. through data cleaning and structuring, but also by delivering results derived from the data, such as calculated figures, ratios and KPIs. For example, instead of only providing securities prices, a securities data provider can also provide the mean price over the last week or month, the price variance or the Value at Risk (VaR) of a security.

The same applies to financial data about companies. Instead of only providing access to annual report data, providers can calculate all kinds of ratios. Examples include Liquidity ratios (like the Current and Quick ratio), Asset Management ratios (like Inventory Turnover or Days' Receivables), Debt Management ratios (like the Times-Interest-Earned (TIE) ratio or Equity Multiplier) and Profitability ratios (like ROA or ROE).

- Flexibility, accuracy and speed are becoming more important, so data providers need to offer more real-time integrations through simple, standardized integration patterns such as REST APIs, webhooks… Today many data providers still deliver daily batch files in a highly proprietary and complex format, which is difficult and costly to integrate. This will change rapidly thanks to open standards (like REST APIs, OAuth2 authentication…).

Additionally, API marketplaces for data providers will emerge. These let customers authenticate once across different data providers. They also offer value-added services such as single billing, usage optimization and fallback to other data providers (in case of unavailability)…

- Data providers are expected to give more transparency and visibility into a customer's data consumption and the associated pricing. Customers want to pay only for what they use, but at the same time want tooling to keep their bill under control. For example, the Google APIs seem to have a low cost per API call. However, with a poor implementation and high usage, the monthly bill can grow very rapidly (thus becoming very expensive).

- As customers want to pay only for the data they use, good subscription models are required. Such models automatically push data updates (e.g. via a webhook) for the entities a consumer has subscribed to. At the same time, they let the consumer define detailed selection (filtering) criteria to be notified of new data records that match those criteria. For example, an asset manager might want updates only for securities held in position. Yet it may also want to let its customers trade any security on specific stock exchanges, including securities not yet held by any customer.

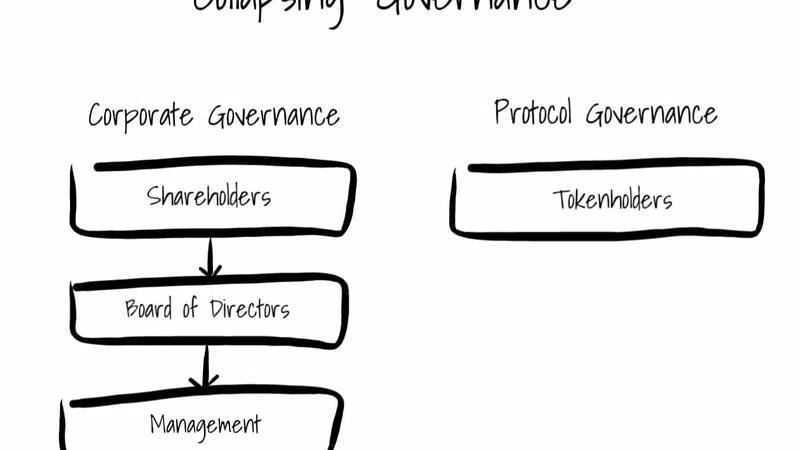

- Data providers are struggling to define a good license model. Data is processed and transformed dozens of times within a financial institution, so its origin quickly becomes hard to trace. This makes it very difficult to verify that data licensed to a single institution is not in fact used by several institutions (within the banking group), or even resold by the bank to third parties. Most data providers strongly restrict what data consumers may do with the data provided. However, these restrictions are often extremely difficult to enforce, especially for smaller data providers selling data to large financial institutions.

- BigTech companies like Google, Facebook and LinkedIn collect enormous amounts of data every day and have the expertise to process this Big Data, which positions them ideally to act as data providers. This is especially true as they can get their users to check, correct and update their own data, effectively crowdsourcing data quality assurance.

- A major issue for data providers remains the lack of a common (worldwide accepted) unique identifier for data entities. Even for simple entities like a person, company, point of sale or security, it is nearly impossible to find a common identifier. For example, a person can be identified by last name, first name and birth date, but this is often not unique enough. Another option is the National Register Number or ID card number. However, this information is often not permanent (e.g. temporary national register numbers or expiring ID cards) and/or heavily regulated. Other options are Google, Facebook or LinkedIn identifiers, but not everyone has an account on those platforms, and those platforms often lack formal identity verification.
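The derived figures mentioned in the list above (mean price over a window, price variance, VaR) are straightforward to compute once clean price data is available. The sketch below makes a few assumptions:

- The input is a plain list of daily closing prices, oldest first.
- The one-day VaR uses historical simulation, one common method among several (parametric and Monte Carlo approaches are others).
- The quantile convention is a simplifying choice.

```python
import math
import statistics


def price_kpis(prices: list[float], confidence: float = 0.95) -> dict[str, float]:
    """Derive simple KPIs from daily closing prices, ordered oldest first."""
    if len(prices) < 2:
        raise ValueError("need at least two prices")
    if not 0 < confidence < 1:
        raise ValueError("confidence must be between 0 and 1")
    # Simple daily returns between consecutive closes
    returns = [today / yesterday - 1 for yesterday, today in zip(prices, prices[1:])]
    # Historical-simulation VaR: the loss at the (1 - confidence) quantile of the
    # observed returns, expressed as a fraction of the position value
    worst_first = sorted(returns)
    index = math.floor((1 - confidence) * len(worst_first))
    one_day_var = max(-worst_first[index], 0.0)
    return {
        "mean_price": statistics.fmean(prices),
        "price_variance": statistics.variance(prices),  # sample variance of prices
        "one_day_var": one_day_var,
    }


print(price_kpis([100, 102, 99, 101, 98]))
```

For these five prices, the mean price is 100.0 and the sample variance is 2.5. The 95% one-day VaR is about 2.97% of the position value, which here is simply the worst observed daily return, since only four returns are available. The caller chooses the window (a week or a month of closes). Risk models more commonly use the variance of returns than of prices.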
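The company ratios listed above can be derived directly from annual report figures. This is a minimal sketch using standard textbook definitions. The field names are hypothetical, since real annual-report schemas differ per jurisdiction and per provider. Some conventions also vary: inventory turnover is sometimes based on sales rather than cost of goods sold, and a 360-day year is sometimes used for days' receivables.

```python
from dataclasses import dataclass


@dataclass
class AnnualReport:
    # Hypothetical field names for illustration; amounts in one currency
    current_assets: float
    inventories: float
    receivables: float
    current_liabilities: float
    total_assets: float
    total_equity: float
    sales: float
    cost_of_goods_sold: float
    ebit: float
    interest_expense: float
    net_income: float


def ratios(r: AnnualReport) -> dict[str, float]:
    return {
        # Liquidity ratios
        "current_ratio": r.current_assets / r.current_liabilities,
        "quick_ratio": (r.current_assets - r.inventories) / r.current_liabilities,
        # Asset management ratios
        "inventory_turnover": r.cost_of_goods_sold / r.inventories,
        "days_receivables": r.receivables / (r.sales / 365),
        # Debt management ratios
        "times_interest_earned": r.ebit / r.interest_expense,
        "equity_multiplier": r.total_assets / r.total_equity,
        # Profitability ratios
        "roa": r.net_income / r.total_assets,
        "roe": r.net_income / r.total_equity,
    }
```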
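The fallback service that API marketplaces may offer (switching to another data provider when one is unavailable) comes down to trying providers in order of preference. The sketch below assumes each provider is wrapped in a client function that raises a hypothetical `ProviderUnavailable` exception on outage. Neither the exception nor the function names come from any real marketplace API.

```python
from typing import Callable, Sequence


class ProviderUnavailable(Exception):
    """Hypothetical error raised by a provider client when its service cannot be reached."""


Fetcher = Callable[[str], dict]


def fetch_with_fallback(key: str,
                        providers: Sequence[tuple[str, Fetcher]]) -> tuple[str, dict]:
    """Try each provider in order of preference; return (provider name, data)."""
    failures: dict[str, str] = {}
    for name, fetch in providers:
        try:
            return name, fetch(key)
        except ProviderUnavailable as exc:
            failures[name] = str(exc)  # remember why, then try the next provider
    raise ProviderUnavailable(f"no provider available for {key!r}: {failures}")
```

Returning the provider name alongside the data lets the consumer record where each value came from, which also helps with the licensing concerns discussed above.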
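On the consumer side, the risk of a low per-call price turning into a large bill can be contained by metering calls and capping spend. The sketch below wraps a hypothetical pay-per-call fetch function with a spending cap and a cache, so repeated lookups are not paid for twice. It is not a feature of any specific provider's API. It also assumes that caching is allowed by the data license, never expires entries, and does not reset the budget each month. A production version would need all three.

```python
from typing import Any, Callable


class BudgetExceeded(Exception):
    """Raised when a paid call would push spending over the configured budget."""


class MeteredClient:
    """Wraps a pay-per-call fetch function with a spending cap and a simple cache."""

    def __init__(self, fetch: Callable[[str], Any], price_per_call: float, budget: float):
        self._fetch = fetch
        self.price_per_call = price_per_call
        self.budget = budget
        self.spent = 0.0
        self.paid_calls = 0
        self._cache: dict[str, Any] = {}

    def get(self, key: str) -> Any:
        if key in self._cache:  # repeated lookups are answered locally, at no cost
            return self._cache[key]
        if self.spent + self.price_per_call > self.budget:
            raise BudgetExceeded(f"call for {key!r} would exceed budget {self.budget}")
        result = self._fetch(key)
        self.paid_calls += 1
        self.spent += self.price_per_call
        self._cache[key] = result
        return result
```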
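The subscription model described above combines two rules: explicit subscriptions on entities (e.g. securities in position) and filtering criteria for new records. The sketch below shows only the routing decision, i.e. which subscribers should receive a given update. The actual push (e.g. an HTTP POST to a webhook URL) is left out. The record fields, the entity IDs and the market codes in the example are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable

Record = dict  # hypothetical update record with "id", "event" and "market" fields


@dataclass
class Subscription:
    subscriber: str
    entity_ids: set[str] = field(default_factory=set)  # e.g. securities in position
    criteria: list[Callable[[Record], bool]] = field(default_factory=list)

    def wants(self, record: Record) -> bool:
        # Notify if explicitly subscribed to the entity OR any filter matches
        return record["id"] in self.entity_ids or any(c(record) for c in self.criteria)


def route(record: Record, subscriptions: list[Subscription]) -> list[str]:
    """Return the subscribers whose webhook should receive this update."""
    return [s.subscriber for s in subscriptions if s.wants(record)]


asset_manager = Subscription(
    "asset-manager",
    entity_ids={"SEC-001"},  # held in position: receive every update
    # Also receive newly listed securities on selected markets
    criteria=[lambda r: r["event"] == "new" and r["market"] in {"XBRU", "XAMS"}],
)
other = Subscription("other-bank", entity_ids={"SEC-002"})

print(route({"id": "SEC-003", "event": "new", "market": "XBRU"}, [asset_manager, other]))
```

A price update for a security that is neither in position nor newly listed on a selected market reaches nobody, which is exactly what keeps the consumer's bill limited to the data it actually needs.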
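Without a common unique identifier, matching records about a person typically combines a strong identifier, when both records carry one, with a weaker composite key as fallback. This is a minimal sketch: the field names (including `national_id`) are hypothetical. The composite key of normalized last name, first name and birth date illustrates why such keys are "not unique enough", because two different people can share them.

```python
import unicodedata
from datetime import date


def normalize(text: str) -> str:
    """Strip accents, case differences and extra whitespace for comparison."""
    decomposed = unicodedata.normalize("NFKD", text)
    without_accents = "".join(c for c in decomposed if not unicodedata.combining(c))
    return " ".join(without_accents.casefold().split())


def same_person(a: dict, b: dict) -> bool:
    # 1. A strong identifier decides when both records carry one
    if a.get("national_id") and b.get("national_id"):
        return a["national_id"] == b["national_id"]
    # 2. Otherwise fall back to a weaker composite key (risk of false positives)
    key_a = (normalize(a["last_name"]), normalize(a["first_name"]), a["birth_date"])
    key_b = (normalize(b["last_name"]), normalize(b["first_name"]), b["birth_date"])
    return key_a == key_b


x = {"last_name": "Dupré", "first_name": "Élise", "birth_date": date(1980, 5, 1)}
y = {"last_name": "DUPRE", "first_name": " elise ", "birth_date": date(1980, 5, 1)}
print(same_person(x, y))
```

With the fallback key, "Dupré, Élise" and "DUPRE, elise" born on the same day are treated as the same person. If both records carry different national IDs, they are kept apart.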

However, data providers will certainly overcome these issues. As a result, these firms will continue to grow, and the data they provide will become a commodity (i.e. a non-differentiating factor) for financial institutions. Financial institutions will then compete in the domain of data management through the enrichments they add and the insights and analytics they derive from all this data.