The justice system is constantly seeking ways to become more effective. As a result, the sector has begun to explore the possibilities of using AI to develop a more organised justice system. While using AI in a field such as law may seem sensible at first, the human-centric nature of the industry means that a number of issues still need to be addressed.

What is AI?

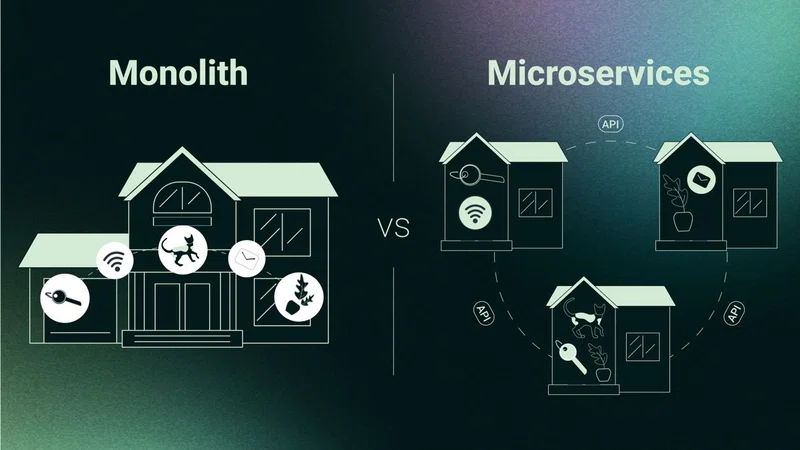

AI is the ability of a digital computer or computer-controlled robot to carry out actions that mimic the functions of human intelligence. As a result, applications of artificial intelligence commonly imitate human behaviour; for instance, the biometric facial recognition technology included in many of our phones imitates our own capacity to recognise various facial features.

However, AI can only mimic a small fraction of the functions of real intelligence, so this definition gives only a vague idea of what an AI program can actually do. The key parameter by which AI is judged is rationality. The rationality of an AI system is provided by its reasoning module, which interprets data from the system's sensors and uses that information to choose an action in pursuit of a set goal. Fundamentally, what makes a good AI system is the ability of its reasoning module to interpret the data and achieve the goal quickly and efficiently.
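The Python sketch below makes the idea of a reasoning module more concrete with a minimal sense-reason-act loop. It assumes a toy thermostat agent, not anything used in the justice system. The names `Percept`, `reasoning_module` and `run_agent` are hypothetical, and so are the goal temperature and tolerance. The "reasoning" is a fixed rule and is far simpler than a real AI system. It does show the basic structure, though: sensor data goes in, and an action chosen to move towards a goal comes out.

```python
from dataclasses import dataclass


@dataclass
class Percept:
    """A single reading from the agent's (hypothetical) temperature sensor."""
    temperature: float


def reasoning_module(percept: Percept, target_temp: float, tolerance: float = 0.5) -> str:
    """Interpret one sensor reading and choose the action that moves towards the goal."""
    if percept.temperature < target_temp - tolerance:
        return "heat"
    if percept.temperature > target_temp + tolerance:
        return "cool"
    return "idle"  # already close enough to the goal


def run_agent(readings: list[float], target_temp: float) -> list[str]:
    """Sense-reason-act loop: each sensor reading is turned into one action."""
    return [reasoning_module(Percept(t), target_temp) for t in readings]


if __name__ == "__main__":
    print(run_agent([18.0, 20.2, 23.5], target_temp=20.0))
```

Calling `run_agent([18.0, 20.2, 23.5], target_temp=20.0)` returns `['heat', 'idle', 'cool']`.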

AI in law enforcement

In recent years, those involved in the criminal justice process have looked towards various forms of AI to investigate possible suspects, provide deterrents and reduce delays in the justice system. This mostly takes the form of facial recognition technology, in which captured images of faces are analysed and compared against databases of known offenders to find a match. Another use is crime prevention, in which AI systems trained to monitor body movements are used to detect suspicious behaviour and help ensure public safety.

AI in legal practice

As well as being used in law enforcement, AI has been implemented in legal practices across the world. These AI tools are currently only suitable for low-level automated tasks, but they create efficiencies and give lawyers more time to concentrate on higher-level work. For example, e-discovery software allows lawyers to scan documents and filter them by specific parameters, such as dates or geographic locations. Legal research software helps lawyers gather data to understand precedents and legal trends. These tools allow lawyers to conduct more comprehensive research at greater speed, saving them time and their clients money. Finally, AI can be used to automate manual, labour-intensive and time-consuming tasks that are less cognitively challenging, such as administration, helping to improve efficiency across a firm.
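The parameter filtering that e-discovery software offers can be sketched in a few lines of Python. This is a hypothetical illustration and does not show how any particular product works. The `Document` fields and the `filter_documents` function are assumptions. Real e-discovery tools also do much more than this, such as searching the text of the documents themselves.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Document:
    """Hypothetical metadata for one document in a disclosure set."""
    title: str
    created: date
    location: str


def filter_documents(docs, start, end, locations):
    """Keep documents created between `start` and `end` (inclusive) in one of `locations`."""
    return [
        doc for doc in docs
        if start <= doc.created <= end and doc.location in locations
    ]


if __name__ == "__main__":
    docs = [
        Document("Email about contract", date(2021, 3, 4), "London"),
        Document("Board minutes", date(2019, 11, 20), "London"),
        Document("Invoice", date(2021, 6, 1), "Manchester"),
    ]
    matches = filter_documents(docs, date(2021, 1, 1), date(2021, 12, 31), {"London"})
    print([doc.title for doc in matches])
```

With this invented data, only the 2021 London email passes the filter. The 2019 minutes fail the date check and the invoice fails the location check.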

AI in a court of law

With AI already being used in some legal practices, what could this technology mean for the future of our justice system? Is it ethical to put this technology in our courts? What dangers could AI pose?

AI tools are already having an impact in courts of law through their use in law enforcement. For example, AI in forensic testing has vastly improved the accuracy and speed with which forensic laboratories can undertake DNA testing and analysis. This has been especially beneficial for teams that are processing low-level or degraded DNA evidence, as AI has been able to successfully analyse samples which could not have been used a decade ago.

AI and sentencing

One area of controversy is the use of AI in sentencing. Tools powered by AI have been used in American courts to help calculate the sentence a convicted person should receive. These risk assessment tools analyse the details of a defendant’s crime and their profile (for example, their offence history) and assign a score reflecting the estimated likelihood that the person will reoffend. A low score indicates that a defendant is considered low risk, whereas a high score indicates that the defendant is considered likely to reoffend, and the judge uses this score to help decide what kind of sentence to impose. The score may also inform a variety of other decisions, such as whether to offer a defendant access to rehabilitation services. It can likewise help a judge determine whether the defendant poses a flight risk and should be held in custody, rather than released on bail, before trial.
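The Python sketch below illustrates the scoring step in hypothetical form. Real risk assessment tools are typically proprietary and use different inputs and methods. The features, weights and score bands here are invented purely for illustration and are not taken from any real tool.

```python
from dataclasses import dataclass


@dataclass
class Defendant:
    """Hypothetical profile features; real tools use many more inputs."""
    prior_offences: int
    age: int
    failed_to_appear_before: bool


# Hypothetical weights: invented for illustration, not taken from any real tool.
WEIGHTS = {"prior_offence": 0.8, "under_25": 1.5, "failed_to_appear": 2.0}


def risk_score(d: Defendant) -> int:
    """Combine profile features into a weighted sum, then clamp it to a 1-10 scale."""
    raw = WEIGHTS["prior_offence"] * d.prior_offences
    if d.age < 25:
        raw += WEIGHTS["under_25"]
    if d.failed_to_appear_before:
        raw += WEIGHTS["failed_to_appear"]
    return max(1, min(10, round(raw)))


def risk_band(score: int) -> str:
    """Map a 1-10 score onto hypothetical low / medium / high bands."""
    if score <= 4:
        return "low"
    if score <= 7:
        return "medium"
    return "high"


if __name__ == "__main__":
    for person in (Defendant(0, 40, False), Defendant(4, 30, True), Defendant(6, 22, True)):
        score = risk_score(person)
        print(person, score, risk_band(score))
```

Here a 40-year-old first-time defendant scores 1 (low). A 22-year-old with six prior offences and a previous failure to appear scores 8 (high). The score is simply arithmetic over the recorded profile, and nothing in it explains why either person might reoffend.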

The rationale for using these kinds of algorithmic tools is that, by precisely forecasting criminal behaviour, authorities can deploy resources in the most efficient ways, although there is no evidence that accurate forecasting is possible with current technologies. In theory, removing the human element from the allocation of resources should also reduce potential prejudice. Instead of relying on their own experience and guideline documents, judges can base their decisions on the AI tool's recommendations, which are derived from historical data.

However, historical crime data is subject to well-documented biases, so these AI tools may not be impartial enough to be trusted with such important decisions.

When making judicial recommendations, AI looks for patterns in statistical correlations to produce a score. The AI tool can only identify a correlation, if one exists; it cannot establish causation. For instance, suppose the AI tool discovered that, over time, individuals named John had a higher rate of absconding than people with any other name. It might then recommend that people named John be less likely to be granted bail. This not only risks violating the rights of people named John, but also shows that the AI has no capacity to understand why people abscond. Because the technology cannot interpret the data meaningfully, the justice system may become worse rather than more effective if its recommendations are followed uncritically.
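The "John" example can be reproduced with a purely correlational rule. The Python sketch below uses a handful of invented records and a hypothetical `recommend_bail` threshold. It counts how often people with each name absconded in the past and recommends bail only when that rate is below the threshold. Nothing in the code asks why anyone absconded.

```python
from collections import defaultdict


def absconding_rate_by(records, feature):
    """Estimate the absconding rate for each value of `feature` by counting past cases.

    This measures correlation only: it says nothing about *why* anyone absconded.
    """
    totals = defaultdict(int)
    absconded = defaultdict(int)
    for record in records:
        value = record[feature]
        totals[value] += 1
        absconded[value] += record["absconded"]  # 1 if they absconded, else 0
    return {value: absconded[value] / totals[value] for value in totals}


def recommend_bail(name, rates, threshold=0.5):
    """Hypothetical rule: recommend bail only if the name's historical rate is below the threshold."""
    return rates.get(name, 0.0) < threshold


# Invented historical records, for illustration only.
HISTORY = [
    {"name": "John", "absconded": 1},
    {"name": "John", "absconded": 1},
    {"name": "John", "absconded": 0},
    {"name": "Sarah", "absconded": 0},
    {"name": "Sarah", "absconded": 1},
    {"name": "Sarah", "absconded": 0},
    {"name": "Sarah", "absconded": 0},
]

rates = absconding_rate_by(HISTORY, "name")
print(rates)
print(recommend_bail("John", rates), recommend_bail("Sarah", rates))
```

With this invented data, people named John have a historical absconding rate of 2/3 and are refused bail. People named Sarah have a rate of 1/4 and are granted it, even though a name cannot plausibly cause anyone to abscond.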

The justice system’s historical bias exacerbates this problem. Members of minority groups have long faced discrimination from law enforcement and the legal system due to ingrained prejudices. As a result, members of the affected minority groups are more likely to be arrested and are subject to “disproportionate prosecutions, unjust trials, and disproportionately severe penalties on criminal accusations”, according to Human Rights Watch. These biases have traditionally existed in justice systems around the world, and an AI tool that finds statistical correlations in data shaped by them could produce disastrous results. A machine learning system of this kind can magnify even the slightest tendency towards discrimination, undoing the gains made in the battle against bias in the legal system.
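The amplification effect can be shown with a deliberately simplified, hypothetical feedback-loop model. In it, two areas, A and B, have exactly the same true level of crime, but B starts with one extra recorded incident in the historical data. Each day the model sends police to whichever area has the most recorded incidents, and new incidents are only recorded where police are sent. The names and numbers are invented, and the sketch does not model any real tool.

```python
def simulate_feedback_loop(initial_records, daily_crime, days):
    """Toy predictive-allocation loop that is 'trained' only on its own recorded data."""
    records = dict(initial_records)  # copy so the caller's data is untouched
    for _ in range(days):
        # "Prediction": the area with the most recorded incidents so far.
        hotspot = max(records, key=records.get)
        # Crime happens in every area, but is only *recorded* where police are sent.
        records[hotspot] += daily_crime[hotspot]
    return records


if __name__ == "__main__":
    history = {"A": 10, "B": 11}   # a slight initial disparity in the records
    true_crime = {"A": 1, "B": 1}  # identical true crime in both areas
    print(simulate_feedback_loop(history, true_crime, days=30))
```

After 30 simulated days the records read 10 for A and 41 for B. A one-incident difference has grown into a roughly fourfold one, even though both areas had identical crime throughout.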

With our current technology, it is impossible to develop a perfect AI (meaning a tool that can match the abilities of a specialist while also mitigating any systemic biases) because of the complexity of the intelligence required. Without a "perfect" AI that is intelligent enough to analyse historical data objectively, the technology cannot currently be incorporated ethically into our judicial system. Since the justice system plays such a significant role in our lives, we cannot rely on AI to shape its decisions until we are certain that it won't have a negative effect on the most vulnerable members of our society. For now, the most ethical implementation we could consider would be to use low-level, cognitively simple AI tools to automate procedures and increase efficiency.